Recently I've participated in a number of discussions about Virtual SAN and vSphere HA in which several interesting questions came up about their interoperability and behavior.

For the most part, the discussions have focused on how vSphere HA works in Virtual SAN enabled clusters and how it handles network partitions and isolation events. Those concerns call for a bit more detail in order to provide adequate technical guidance.

Before diving into the details, let me start with an official statement about Virtual SAN and vSphere HA. vSphere HA fully supports and is integrated with Virtual SAN. This support required some changes in vSphere HA which impact vSphere HA behavior and result in some unique Virtual SAN related configuration considerations for vSphere HA.

In this post I will detail the following information and recommendations:

- Architecture Changes Impacting Isolation and Partition Support

- Heartbeat Datastore Recommendations

- Host Isolation Address Recommendations

- Isolation Response Recommendations

Architecture Changes Impacting Isolation and Partition Support

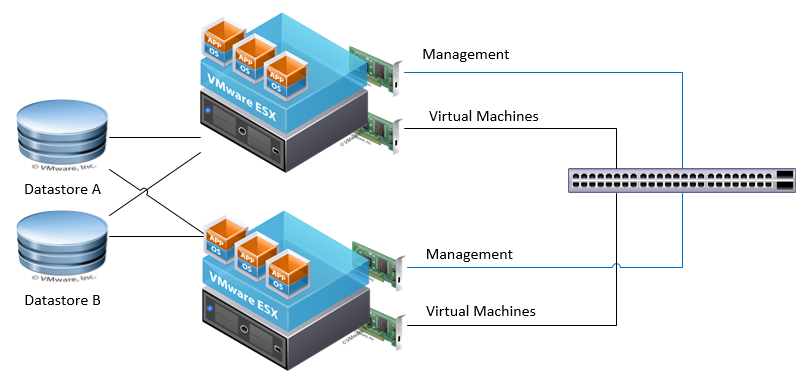

In vSphere 5.5, whenever HA is enabled in a cluster that is also enabled for Virtual SAN, the vSphere HA FDM agents and heartbeat monitoring operations use the Virtual SAN network instead of the management network(s).

The modifications to the design and behavior of vSphere HA were made to prevent network partition events in which the HA partitions and the Virtual SAN partitions do not coincide, as illustrated here:

- HA partition A: hosts esxi-01, 05, 06

- Virtual SAN partition A: hosts esxi-01, 02, 03

- HA partition B: hosts esxi-02, 03, 04

- Virtual SAN partition B: hosts esxi-04, 05, 06

Such partitions are hard to reason about and troubleshoot. They would also have required significant additional HA logic to support.

The 'same networks' constraint leads to simpler partitions. The desired and actual behavior is illustrated here:

- HA & Virtual SAN partition A: hosts esxi-01, 02, 03

- HA & Virtual SAN partition B: hosts esxi-04, 05, 06
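To make the difference between the two illustrations concrete, here is a minimal Python sketch. The function name and the host-set representation are my own illustrative assumptions and are not part of vSphere; the sketch only checks whether the HA view and the Virtual SAN view of a split cluster agree.

```python
def partitions_aligned(ha_partitions, vsan_partitions):
    """Return True when the HA partitions and Virtual SAN partitions are identical sets of hosts."""
    ha = {frozenset(p) for p in ha_partitions}
    vsan = {frozenset(p) for p in vsan_partitions}
    return ha == vsan


# Separate networks: the two views of the cluster can disagree.
ha_view = [{"esxi-01", "esxi-05", "esxi-06"}, {"esxi-02", "esxi-03", "esxi-04"}]
vsan_view = [{"esxi-01", "esxi-02", "esxi-03"}, {"esxi-04", "esxi-05", "esxi-06"}]
print(partitions_aligned(ha_view, vsan_view))    # False

# Same network: HA and Virtual SAN always see the same split.
print(partitions_aligned(vsan_view, vsan_view))  # True
```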

In a vSphere 5.5 Virtual SAN enabled cluster, Virtual SAN datastores are not used by the vSphere HA agents as a means of monitoring partitioned or isolated hosts. This is because, during a partition or isolation event, the impacted hosts would not be able to access heartbeat information stored on the Virtual SAN datastore.

In a partition event, the heartbeat information would be accessible to only one segment of the cluster, which defeats the purpose. Virtual SAN uses a proprietary mechanism in partition or isolation scenarios that prevents data corruption and, as a byproduct, would prevent some hosts from accessing the heartbeat information.

Heartbeat Datastore Recommendations

Heartbeat datastores are not necessary in a Virtual SAN cluster, but like in a non-Virtual SAN cluster, if available, they can provide additional benefits. VMware recommends provisioning Heartbeat datastores when the benefits they provide are sufficient to warrant any additional provisioning costs.

For example, if you are using converged networking, provisioning Heartbeat datastores can be quite expensive since separate switch infrastructure should be used for providing each host with access to a fault-isolated datastore. Hence, there is a higher cost to realizing the benefits. However, if you already have multiple physical networks, the cost of setting up an iSCSI or NFS datastore could be much lower.

Heartbeat Datastores provide the following benefits:

- They allow vCenter to report the actual state of a partitioned or isolated host rather than reporting that it appears to have failed.

- For non-Virtual SAN VMs, they increase the likelihood that an FDM master will respond to a VM that fails after its host becomes partitioned or isolated.

- They prevent vSphere HA from causing VM MAC address conflicts on the VM network after a host isolation or partition when the VM network is not affected by the event. Without them, the conflict exists until the original instance is powered off, which for a partition would occur automatically only after the partition is resolved.

Heartbeat datastores provide these benefits by allowing an FDM master agent to determine if a non-responsive host is isolated, partitioned or dead and if alive, which VMs are running on that host.
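The reasoning in the paragraph above can be sketched as a small decision function. This is a simplified model, not the actual FDM algorithm: the `HostState` names, the `isolation_flag` input (standing in for whatever the isolated host records on the heartbeat datastore), and the function signature are all assumptions made for illustration.

```python
from enum import Enum


class HostState(Enum):
    LIVE = "live"
    ISOLATED = "isolated"
    PARTITIONED = "partitioned"
    DEAD = "dead"
    APPEARS_FAILED = "appears to have failed"


def classify_host(network_heartbeat, datastore_heartbeat,
                  isolation_flag=False, has_heartbeat_datastore=True):
    """Classify a host from the master's point of view (simplified model)."""
    if network_heartbeat:
        return HostState.LIVE
    if not has_heartbeat_datastore:
        # Without a heartbeat datastore the master cannot tell the cases apart.
        return HostState.APPEARS_FAILED
    if not datastore_heartbeat:
        return HostState.DEAD
    # The host is alive but unreachable over the network.
    return HostState.ISOLATED if isolation_flag else HostState.PARTITIONED


print(classify_host(False, True))                                  # HostState.PARTITIONED
print(classify_host(False, False, has_heartbeat_datastore=False))  # HostState.APPEARS_FAILED
```

The last branch is where the heartbeat datastore earns its keep: a host that still writes datastore heartbeats is known to be alive, so its VMs are still running and do not need to be restarted blindly.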

Only use a datastore that all hosts will be able to access during a Virtual SAN network partition or isolation event. If you are already using a non-Virtual SAN datastore in a Virtual SAN cluster, there is no need to add another datastore just for heartbeating if the existing datastores are fault isolated from the Virtual SAN network.

To that end, look at your design holistically. For example, if you add an iSCSI datastore as a Heartbeat datastore and your Management, Virtual SAN and iSCSI VMkernel interfaces all use the same 10GbE link, you won't get the same benefit as you would if the Virtual SAN and iSCSI interfaces used different links. In the shared-link case, if the 10GbE link fails, then even with a Heartbeat datastore the FDM master won't be able to determine whether non-responsive slaves are isolated or dead.
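One way to sanity-check a design along these lines is to compare the physical uplinks behind each VMkernel interface. The sketch below is only an illustration: the dictionary layout, the interface labels, and the `vmnic` names are hypothetical inputs you would fill in from your own environment.

```python
def shared_uplinks(interface_uplinks, first, second):
    """Return the physical uplinks that two VMkernel interfaces have in common."""
    return set(interface_uplinks[first]) & set(interface_uplinks[second])


# Hypothetical converged design: everything rides on one 10GbE uplink.
converged = {"management": ["vmnic0"], "vsan": ["vmnic0"], "iscsi": ["vmnic0"]}
# Hypothetical separated design: iSCSI has its own uplink.
separated = {"management": ["vmnic0"], "vsan": ["vmnic0"], "iscsi": ["vmnic1"]}

for name, design in (("converged", converged), ("separated", separated)):
    common = shared_uplinks(design, "vsan", "iscsi")
    verdict = "not fault isolated" if common else "fault isolated"
    print(f"{name}: heartbeat datastore is {verdict} from Virtual SAN")
```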

Host Isolation Address Recommendations

The HA agent on a host declares the host isolated if it observes no HA agent-to-agent network traffic and if attempts to ping the configured isolation addresses fail. Thus, isolation addresses prevent an HA agent from declaring its host isolated when the agent cannot communicate with other HA agents for some other reason, such as the other hosts having failed. HA allows you to set up to 10 isolation addresses.

- When using Virtual SAN and vSphere HA, consider configuring isolation addresses that will allow all hosts to determine if they have lost access to the Virtual SAN network. For example, use the default gateway(s) of the Virtual SAN network(s). Isolation addresses are set using the vSphere HA advanced option das.isolationAddressX.

- Configure HA not to use the default management network's default gateway. This is done using the vSphere HA advanced option das.useDefaultIsolationAddress=false.
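The isolation test described above boils down to two conditions that must both hold. A minimal sketch, with a hypothetical function name and a plain dictionary standing in for real ping results:

```python
def is_isolated(sees_agent_traffic, ping_results):
    """Decide whether a host should declare itself isolated.

    ping_results maps each configured isolation address to True (reply) or False (no reply).
    """
    if sees_agent_traffic:
        return False
    # Isolated only if no isolation address answered at all.
    return not any(ping_results.values())


# Other hosts have failed, but the gateway still answers: not isolated.
print(is_isolated(False, {"192.168.100.1": True}))   # False
# No agent traffic and no isolation address answers: isolated.
print(is_isolated(False, {"192.168.100.1": False}))  # True
```

The addresses shown are placeholders. Note how a single reachable isolation address is enough to keep the host from declaring isolation, which is why the choice of addresses matters.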

- If isolation and partitions are possible, ensure one set of isolation addresses is accessible by the hosts in each segment during a partition.
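The recommendations above translate into a handful of advanced option key/value pairs. The helper below is a sketch that only builds that mapping; it does not talk to vCenter. The function name, the example gateway addresses, and the choice to number the options from 0 (my reading of the `X` in `das.isolationAddressX`) are assumptions.

```python
import ipaddress

MAX_ISOLATION_ADDRESSES = 10  # the limit stated in this post


def build_isolation_options(addresses, use_default_gateway=False):
    """Build vSphere HA advanced options for a list of isolation addresses."""
    if len(addresses) > MAX_ISOLATION_ADDRESSES:
        raise ValueError(f"at most {MAX_ISOLATION_ADDRESSES} isolation addresses are allowed")
    options = {"das.useDefaultIsolationAddress": str(use_default_gateway).lower()}
    for index, address in enumerate(addresses):
        ipaddress.ip_address(address)  # raises ValueError on a malformed address
        options[f"das.isolationAddress{index}"] = address
    return options


# Hypothetical Virtual SAN gateways, one reachable from each possible partition.
for key, value in build_isolation_options(["192.168.100.1", "192.168.101.1"]).items():
    print(f"{key} = {value}")
```

The resulting pairs would then be entered under the cluster's vSphere HA advanced options.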

A couple of additional notes regarding the above recommendations:

- If the Virtual SAN network is non-routable and a single-host partition is possible, then provide pingable isolation addresses on the Virtual SAN subnet. If a single-host partition is not likely, either provision such an isolation address or use some of the Virtual SAN network IP addresses of the cluster hosts as isolation addresses, selecting a subset from each physical partition possible in your environment.

- Each Virtual SAN network should be on a unique subnet. Using the same subnet for two VMkernel networks can cause unexpected results. For example, vSphere HA may fail to detect Virtual SAN network isolation events.
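The unique-subnet recommendation is easy to check before deployment. Here is a small sketch using Python's standard `ipaddress` module; the network labels and CIDR ranges are hypothetical.

```python
import ipaddress
from itertools import combinations


def find_overlapping_networks(vmk_networks):
    """Return pairs of labels whose subnets overlap.

    vmk_networks maps a label (e.g. "vsan") to a CIDR string.
    """
    parsed = {label: ipaddress.ip_network(cidr, strict=False)
              for label, cidr in vmk_networks.items()}
    return [(a, b) for a, b in combinations(sorted(parsed), 2)
            if parsed[a].overlaps(parsed[b])]


networks = {
    "management": "10.0.0.0/24",
    "vsan": "10.0.1.0/24",
    "vmotion": "10.0.1.0/24",  # same subnet as vsan: should be flagged
}
print(find_overlapping_networks(networks))  # [('vmotion', 'vsan')]
```

Passing `strict=False` lets you supply an interface address such as `10.0.1.15/24` instead of the network address.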

Isolation Response Recommendations

The HA isolation response configuration for a VM can be used to achieve the following during a host isolation event:

- Avoid VM MAC address collisions if independent heartbeat datastores are not used. Note: these can be caused by the FDM master restarting an isolated VM, resulting in two instances of the same VM on the network.

- Allow HA to restart a VM that is running on an isolated host.

- Minimize the likelihood that the memory state of the VM is lost when its host becomes isolated.

The isolation response to select depends on a number of factors. These are summarized in the tables below. The tables include recommendations for Virtual SAN and non-Virtual SAN virtual machines since clusters may contain a mixture of both.

Decisions Table 1

Decisions Table 2

Note: 'Shutdown' may also be used anytime 'power off' is mentioned if it is likely that a VM will retain access to some of its storage but not all during a host isolation. However, note that in such a situation some of its virtual disks may be updated while others are not, which may cause inconsistencies when the VM is restarted. Further, shutdown can take longer than power off.

I want to thank GS Khalsa from the Storage & Availability Technical Marketing Team, who is responsible for availability features such as HA, FT, App HA and SRM, for his contributions and discussion on Virtual SAN and vSphere HA. I also want to thank our engineers Keith Farkas and Manoj Krishnan for validating the accuracy of this article and contributing greatly to it.

– Enjoy

For future updates, be sure to follow me on Twitter: @PunchingClouds

What is HA Datastore Heartbeating and how do you use it?

When the master host in a vSphere HA cluster cannot communicate with a slave host over the management network, the master host uses datastore heartbeating to determine whether the slave host has failed, is in a network partition, or is network isolated. If the slave host has stopped datastore heartbeating, it is considered to have failed and its virtual machines are restarted elsewhere. vCenter Server selects a preferred set of datastores for heartbeating. This selection is made to maximize the number of hosts that have access to a heartbeating datastore and to minimize the likelihood that the datastores are backed by the same storage array or NFS server.
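The two selection goals can be illustrated with a greedy sketch. This is not vCenter Server's actual algorithm: the scoring order, the default of two datastores, and the input layout are all assumptions chosen to show how host coverage and backing diversity can pull in different directions.

```python
def pick_heartbeat_datastores(datastores, count=2):
    """Greedily pick datastores, preferring unused backings and wider host coverage.

    datastores maps a name to {"hosts": set of host names, "backing": array or server id}.
    """
    chosen, covered, used_backings = [], set(), set()
    candidates = dict(datastores)
    while candidates and len(chosen) < count:
        def score(name):
            info = candidates[name]
            return (info["backing"] not in used_backings,  # avoid a shared array or NFS server
                    len(info["hosts"] - covered),           # reach hosts not yet covered
                    len(info["hosts"]))                     # otherwise prefer wider access
        best = max(sorted(candidates), key=score)
        info = candidates.pop(best)
        chosen.append(best)
        covered |= info["hosts"]
        used_backings.add(info["backing"])
    return chosen


all_hosts = {f"esxi-0{n}" for n in range(1, 7)}
inventory = {
    "iscsi-01": {"hosts": all_hosts, "backing": "array-a"},
    "iscsi-02": {"hosts": all_hosts, "backing": "array-a"},
    "nfs-01": {"hosts": all_hosts - {"esxi-06"}, "backing": "nfs-b"},
}
print(pick_heartbeat_datastores(inventory))  # ['iscsi-01', 'nfs-01']
```

In this hypothetical inventory the second pick is `nfs-01` even though it reaches one host fewer, because `iscsi-02` shares its backing array with the first pick.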

In almost every scenario you want to use datastore heartbeating. It is an important mechanism both for protecting virtual machine workloads and for preventing failover from occurring due to a false positive. However, there are situations where the default datastore heartbeating requirements do not fit. One example that comes to mind is a cluster of VMware ESXi servers that hosts a critical application requiring only one LUN, due to either its size or other factors, where licensing or the importance of the application leads you to dedicate a cluster exclusively to that application. In that scenario, with only one datastore, vSphere will display a warning. To resolve this you must alter the datastore heartbeat settings for the vSphere cluster.

Steps to configure Datastore Heartbeating

Step 1: Log in to vCenter and view the cluster. You will see a warning on the hosts about the number of heartbeat datastores.

Step 2: Right-click the cluster, click Edit Settings, select vSphere HA, and then Advanced Options.

Step 3: Under Option, add an entry for das.ignoreInsufficientHbDatastore and set its value to true.

Step 4: Right-click each host in the cluster that shows the warning and select Reconfigure for HA.